Ankur Handa

Email / GitHub / Google Scholar / Posts

Research

I work at the intersection of perception (computer vision) and control for robotics. My work relies heavily on machine learning, optimisation and simulation (rendering and physics). I am currently a Research Scientist at NVIDIA Robotics; prior to that I was a Research Scientist at OpenAI, and before that I obtained my PhD from Imperial College.

Highlights

Some of my interesting works include VaFRIC and ICL-NUIM, where we used computer graphics to benchmark tracking and SLAM systems. This was followed by SceneNet and SceneNet RGB-D, where we used computer graphics for scene understanding (semantic segmentation) with neural networks. Recently, we looked at using GPU Accelerated Physics with Reinforcement Learning to understand how to close the sim-to-real gap. More recently, the work on DexPilot allows us to do entirely vision-based teleoperation to perform many challenging robotics tasks. I also maintain the sim2realAIorg channel on Twitter, where I regularly post recent interesting work on sim-to-real, and I blog about interesting stuff at https://sim2realai.github.io/ (linked to the Twitter channel).

|

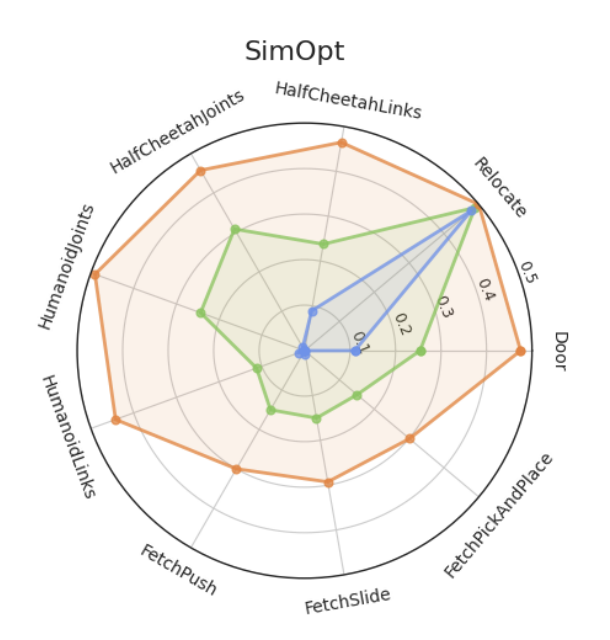

A User's Guide to Calibrating Robotics Simulators

Bhairav Mehta, Ankur Handa, Dieter Fox, Fabio Ramos

Conference on Robot Learning (CoRL), 2020 / arxiv

The paper explores various robotics simulator calibration techniques and puts the strengths and limitations of each in perspective.

|

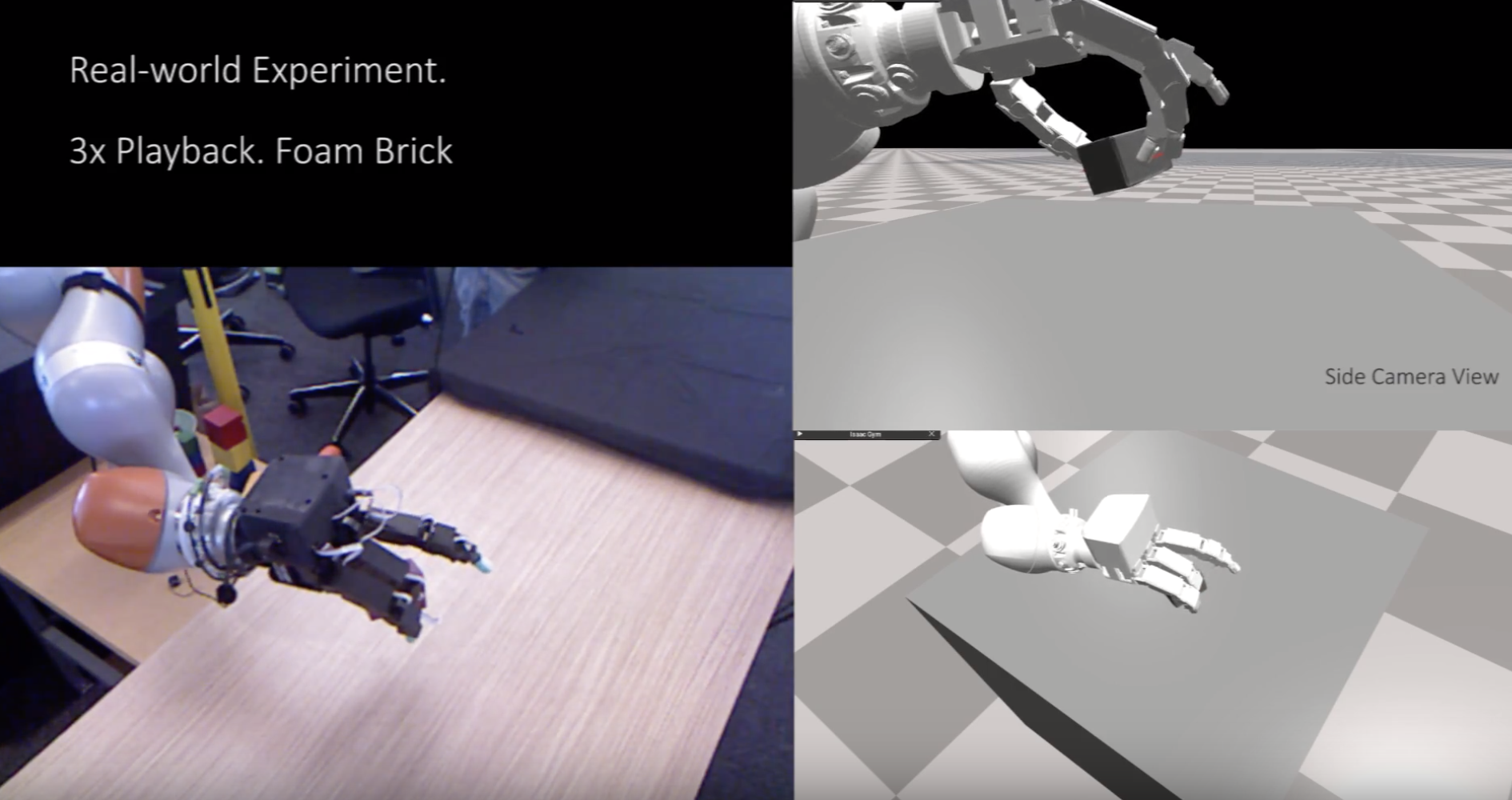

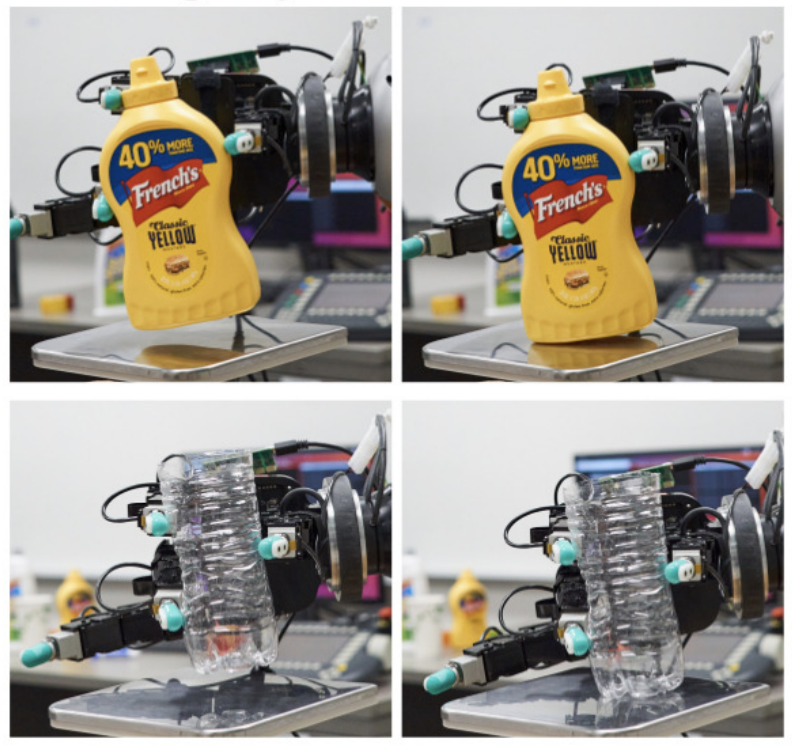

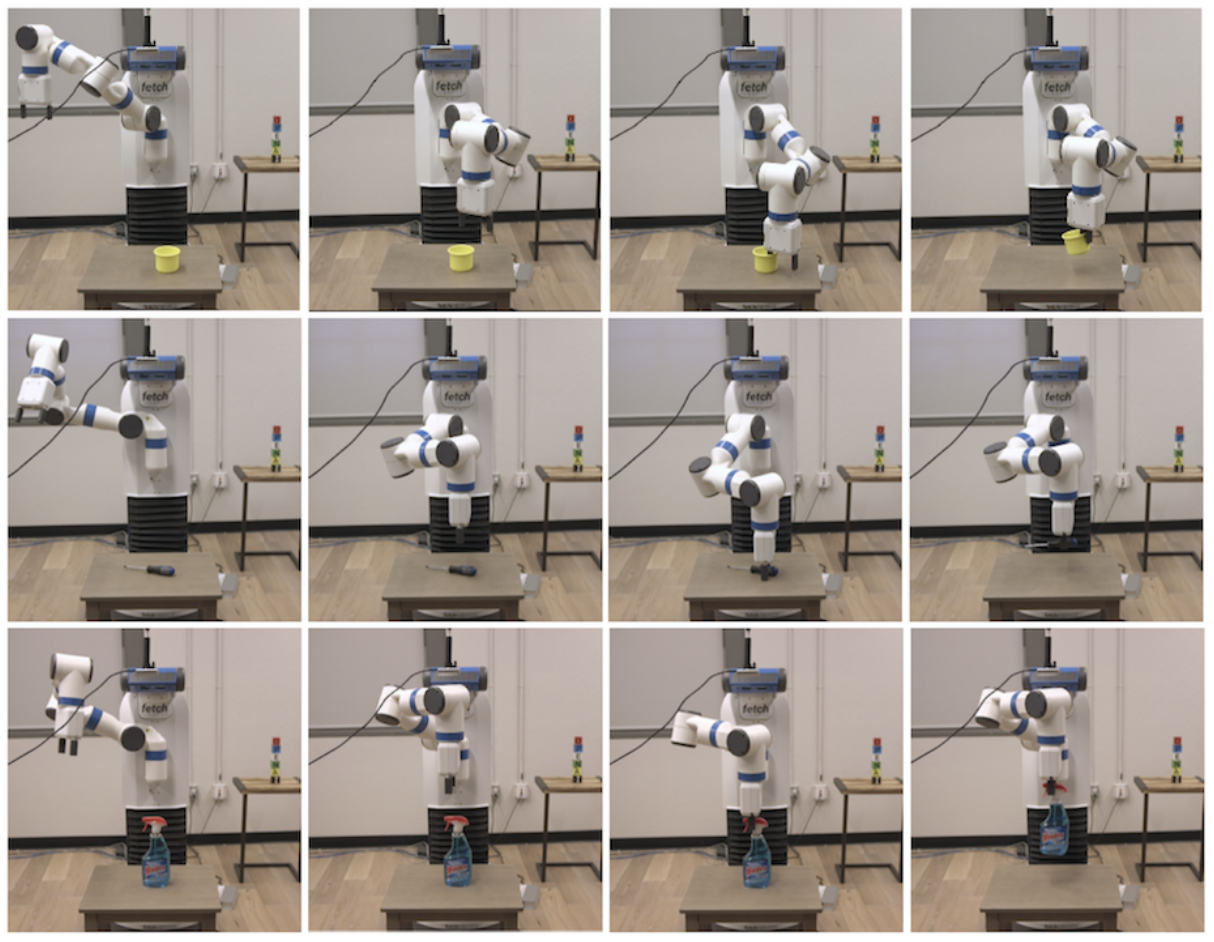

DexPilot: Vision Based Teleoperation of Dexterous Robotic Hand-Arm System

Ankur Handa*, Karl Van Wyk*, Wei Yang, Jacky Liang, Yu-Wei Chao, Qian Wan, Stan Birchfield, Nathan Ratliff, Dieter Fox (* Equal Contribution)

International Conference on Robotics and Automation (ICRA), 2020 / arxiv

An entirely vision-based teleoperation system for a robotic hand-arm system, used to collect data for imitation learning. The system works without any markers or gloves on the hand.

|

Model-based Generalization under Parameter Uncertainty using Path Integral Control

Ian Abraham, Ankur Handa, Nathan Ratliff, Kendall Lowrey, Todd D. Murphey, Dieter Fox

Robotics and Automation Letters (RA-L) and International Conference on Robotics and Automation (ICRA), 2020

This work addresses the problem of robot interaction in complex environments where online control and adaptation are necessary. By expanding the sample space in the free energy formulation of path integral control, we derive a natural extension to path integral control that embeds uncertainty into action and provides robustness for model-based robot planning.

|

In-Hand Object Pose Tracking via Contact Feedback and GPU-Accelerated Robotic Simulation

Jacky Liang, Ankur Handa, Karl Van Wyk, Viktor Makoviychuk, Oliver Kroemer, Dieter Fox

International Conference on Robotics and Automation (ICRA), 2020

In this work, we propose using GPU-accelerated parallel robot simulations and derivative-free, sample-based optimizers to track in-hand object poses with contact feedback during manipulation. We use physics simulation as the forward model for robot-object interactions, and the algorithm jointly optimizes for the state and the parameters of the simulations, so they better match those of the real world.

|

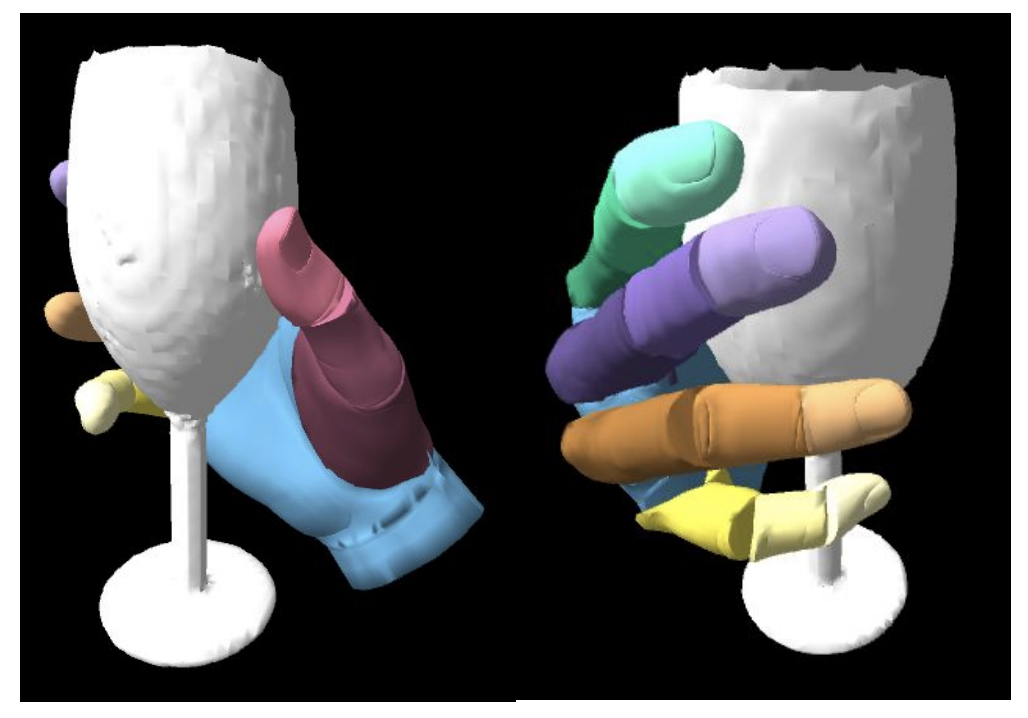

ContactGrasp: Functional Multi-finger Grasp Synthesis from Contact

Samarth Brahmbhatt, Ankur Handa, James Hays and Dieter Fox

International Conference on Intelligent Robots and Systems (IROS), 2019 / arxiv

We present ContactGrasp, a framework for functional grasp synthesis from object shape and contact on the object surface. Contact can be manually specified or obtained through demonstrations. Our contact representation is object-centric and allows functional grasp synthesis even for hand models different than the one used for demonstration.

|

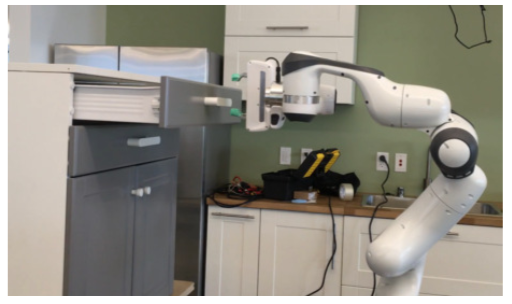

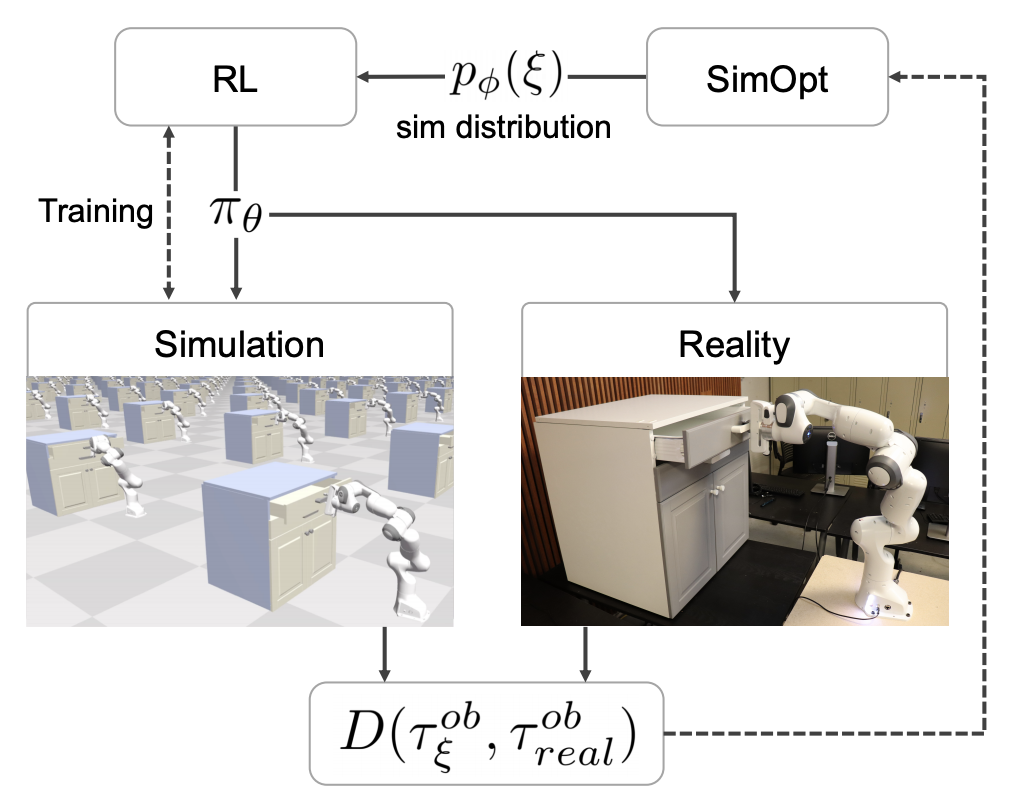

Closing the Sim-to-Real Loop: Adapting Simulation Randomization with Real World Experience

Yevgen Chebotar, Ankur Handa, Viktor Makoviychuk, Miles Macklin, Jan Isaac, Nathan Ratliff, Dieter Fox

International Conference on Robotics and Automation (ICRA), 2019 / arxiv

The system closes the loop between simulation and the real world by first training a policy in simulation and then testing it in the real world. The real-world data is then used to adjust the variances of the parameters that are randomised while training a policy with PPO.

Best Student Paper Award Finalist.

VentureBeat, IEEE Spectrum, Robotics & Automation News, ICRA award link

|

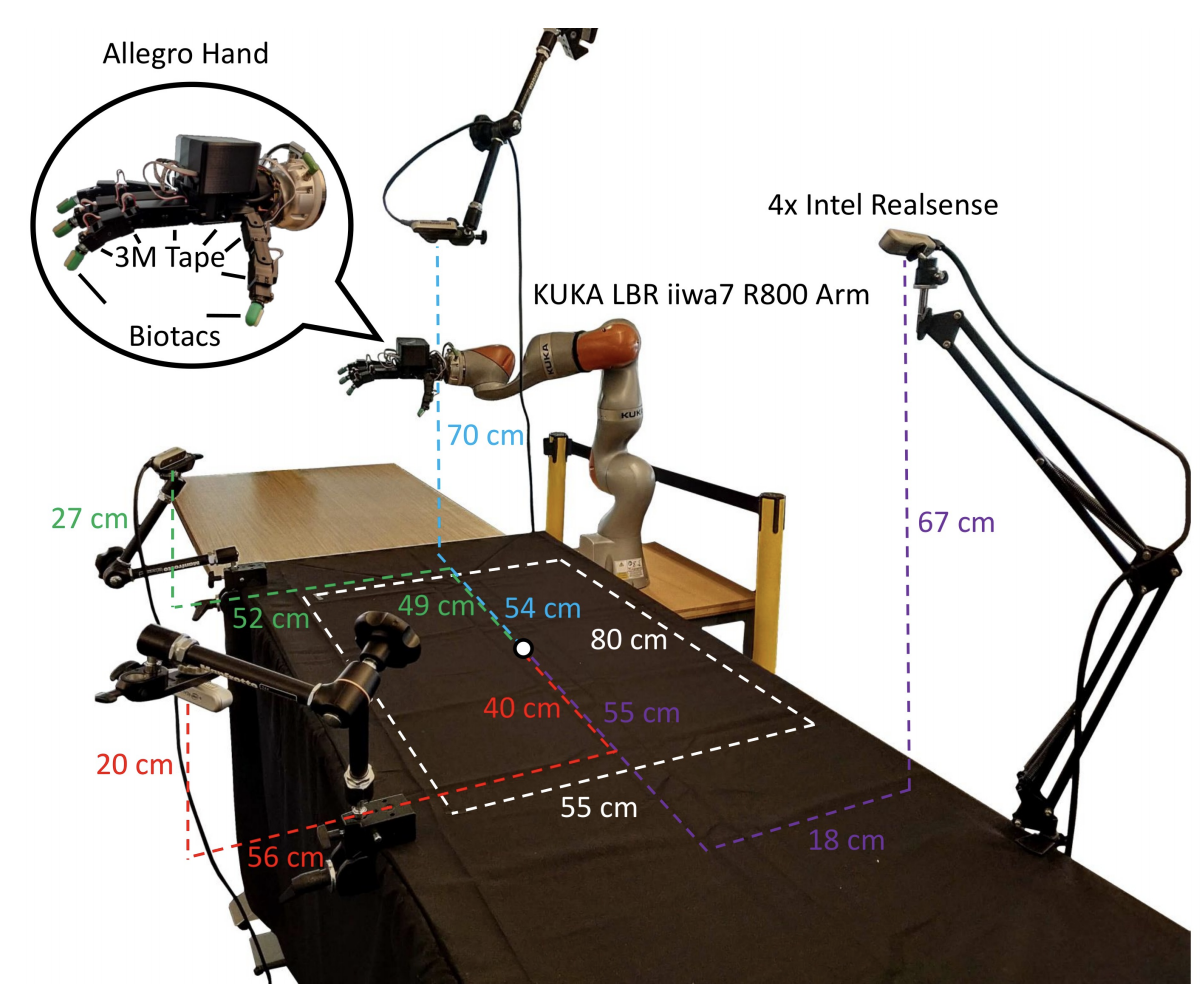

Robust Learning of Tactile Force Estimation through Robot Interaction

Balakumar Sundaralingam, Alexander (Sasha) Lambert, Ankur Handa, Byron Boots, Tucker Hermans, Stan Birchfield, Nathan Ratliff, Dieter Fox

International Conference on Robotics and Automation (ICRA), 2019 / arxiv

In this paper, we explore learning a robust model that maps tactile sensor signals to force. We specifically explore learning a mapping for the SynTouch BioTac sensor via neural networks.

Best Manipulation Paper Award Finalist.

|

Learning Latent Space Dynamics for Tactile Servoing

Giovanni Sutanto, Nathan Ratliff, Balakumar Sundaralingam, Yevgen Chebotar, Zhe Su, Ankur Handa, Dieter Fox

International Conference on Robotics and Automation (ICRA), 2019 / arxiv

In this paper, we specifically address the challenge of tactile servoing: given the current tactile sensing and a target/goal tactile sensing (memorized from a successful task execution in the past), what is the action that will bring the current tactile sensing closer to the target tactile sensing at the next time step?

|

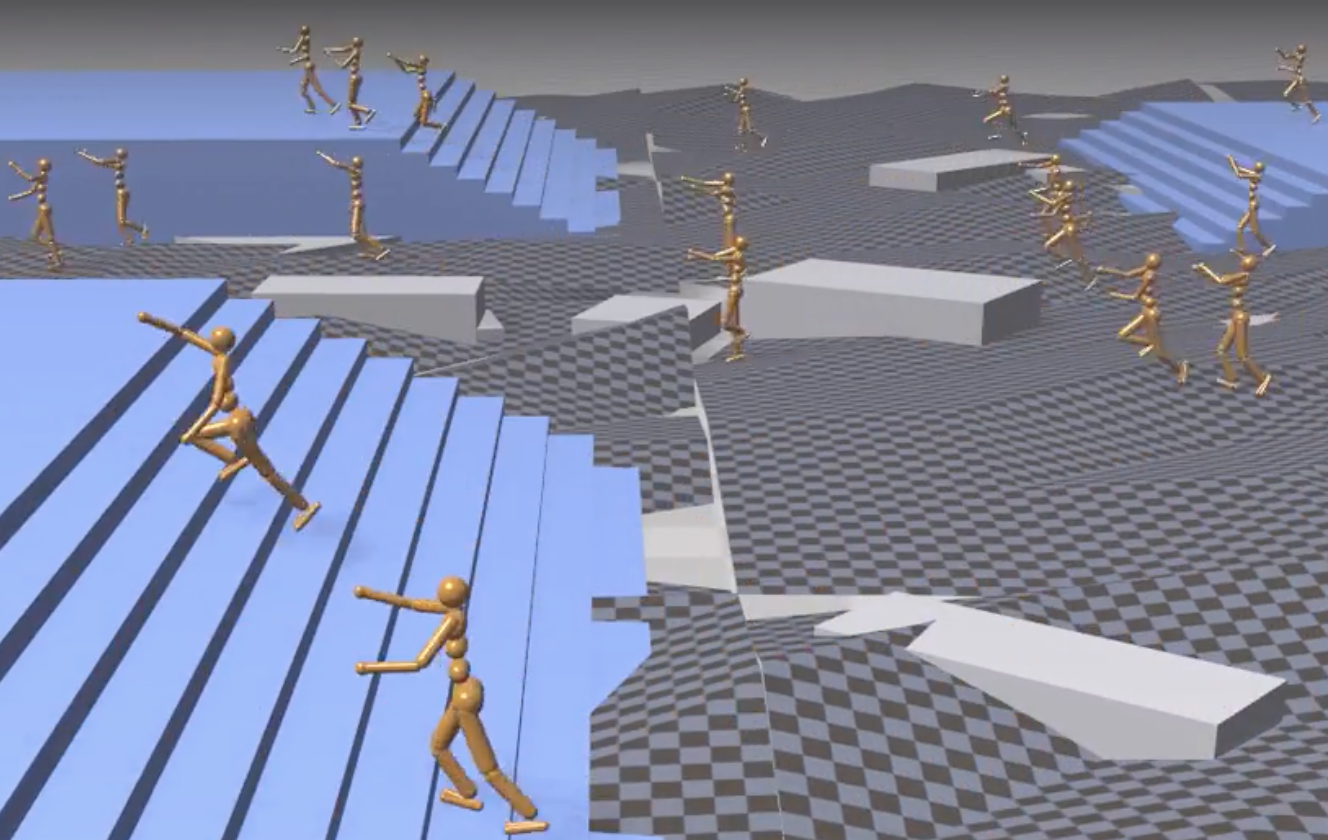

GPU-Accelerated Robotic Simulation for Distributed Reinforcement Learning

Ankur Handa*, Jacky Liang*, Viktor Makoviychuk*, Nuttapong Chentanez, Miles Macklin, Dieter Fox (* Equal Contribution)

Conference on Robot Learning (CoRL), 2018 / arxiv

In this work, we propose using GPU-accelerated RL simulations as an alternative to CPU ones. Using NVIDIA Flex, a GPU-based physics engine, we show promising speed-ups of learning various continuous-control, locomotion tasks. With one GPU and CPU core, we are able to train the Humanoid running task with PPO in less than 20 minutes, using 10-1000x fewer CPU cores than previous works.

Spotlight.

|

Domain randomization and generative models for robotic grasping

Josh Tobin, Lukas Biewald, Rocky Duan, Marcin Andrychowicz, Ankur Handa, Vikash Kumar, Bob McGrew, Alex Ray, Jonas Schneider, Peter Welinder, Wojciech Zaremba, Pieter Abbeel

International Conference on Intelligent Robots and Systems (IROS), 2018 / arxiv

In this work, we explore a novel data generation pipeline for training a deep neural network to perform grasp planning that applies the idea of domain randomization to object synthesis. We generate millions of unique, unrealistic procedurally generated objects, and train a deep neural network to perform grasp planning on these objects.

|

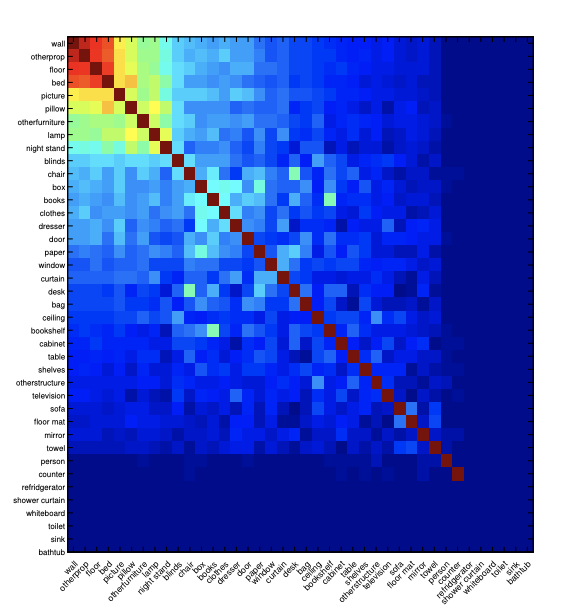

SceneNet RGB-D: Can 5M Synthetic Images Beat Generic ImageNet Pre-training on Indoor Segmentation?

John McCormac, Ankur Handa, Stefan Leutenegger, Andrew J. Davison

International Conference on Computer Vision (ICCV), 2017 / arxiv

We propose a new dataset of 5M indoor images with photorealistic rendering and ground truth for segmentation, depth and optical flow. By training on this huge dataset we are able to show that we can beat a network pre-trained on ImageNet on indoor scene segmentation.

|

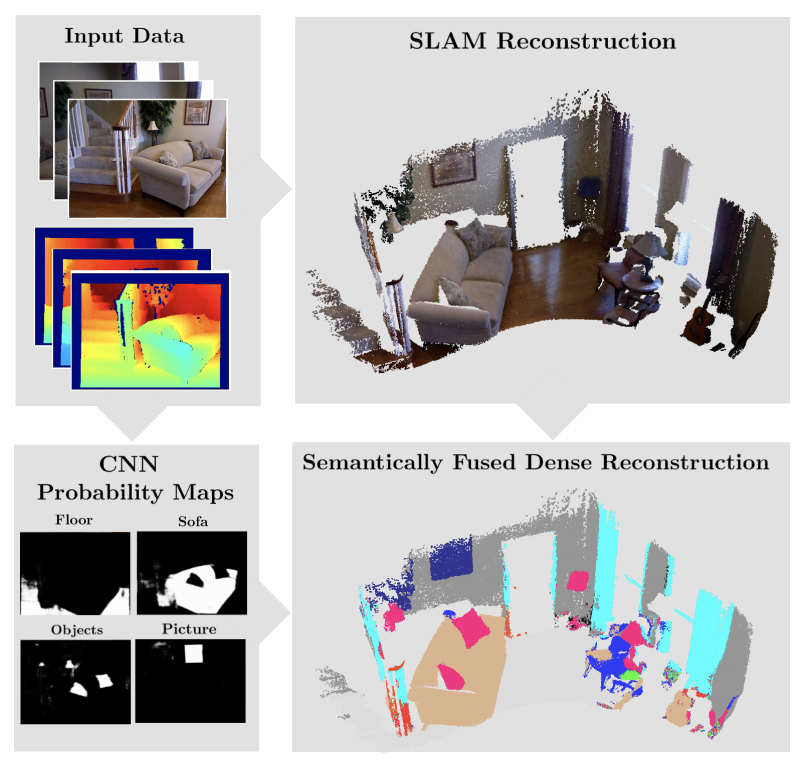

SemanticFusion: Dense 3D Semantic Mapping with Convolutional Neural Networks

John McCormac, Ankur Handa, Stefan Leutenegger, Andrew J. Davison

International Conference on Robotics and Automation (ICRA), 2017 / arxiv

We combine Convolutional Neural Networks (CNNs) and a state-of-the-art dense Simultaneous Localisation and Mapping (SLAM) system, ElasticFusion, which provides long-term dense correspondence between frames of indoor RGB-D video even during loopy scanning trajectories. These correspondences allow the CNN’s semantic predictions from multiple viewpoints to be probabilistically fused into a map. Our system is efficient enough to allow real-time interactive use at frame rates of approximately 25 Hz.

|

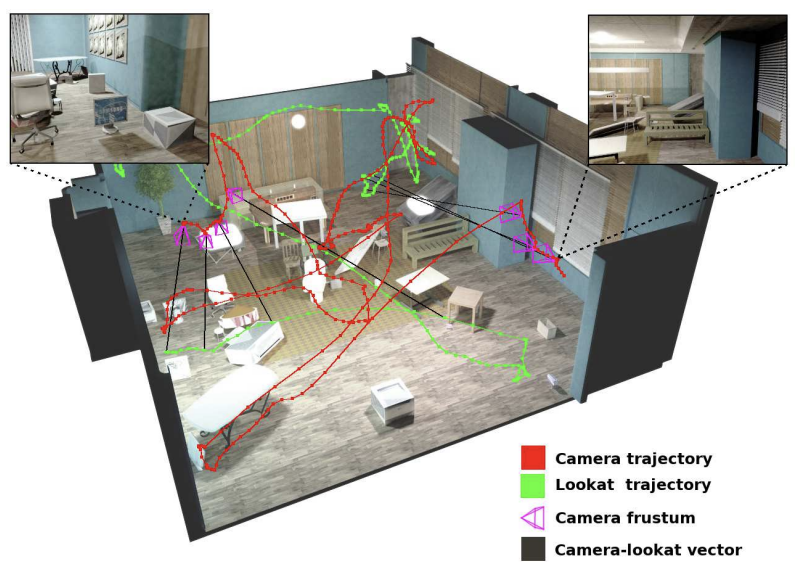

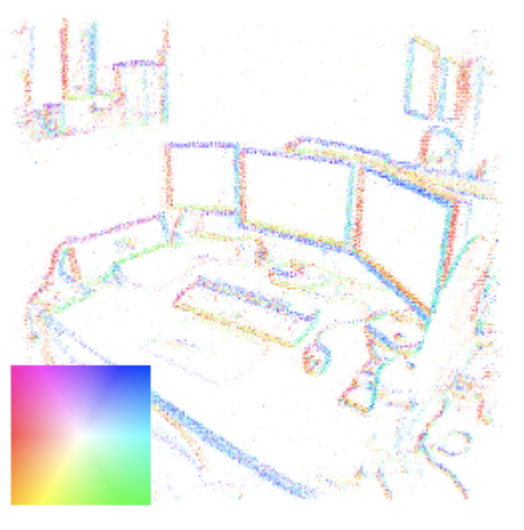

SceneNet RGB-D: 5M Photorealistic Images of Synthetic Indoor Trajectories with Ground Truth

John McCormac, Ankur Handa, Stefan Leutenegger, Andrew J. Davison

arXiv, 2016 / arxiv

We introduce SceneNet RGB-D, expanding the previous work of SceneNet to enable large scale photorealistic rendering of indoor scene trajectories. It provides pixel-perfect ground truth for scene understanding problems such as semantic segmentation, instance segmentation, and object detection, and also for geometric computer vision problems such as optical flow, depth estimation, camera pose estimation, and 3D reconstruction. Random sampling permits virtually unlimited scene configurations, and here we provide a set of 5M rendered RGB-D images from over 15K trajectories in synthetic layouts with random but physically simulated object poses.

|

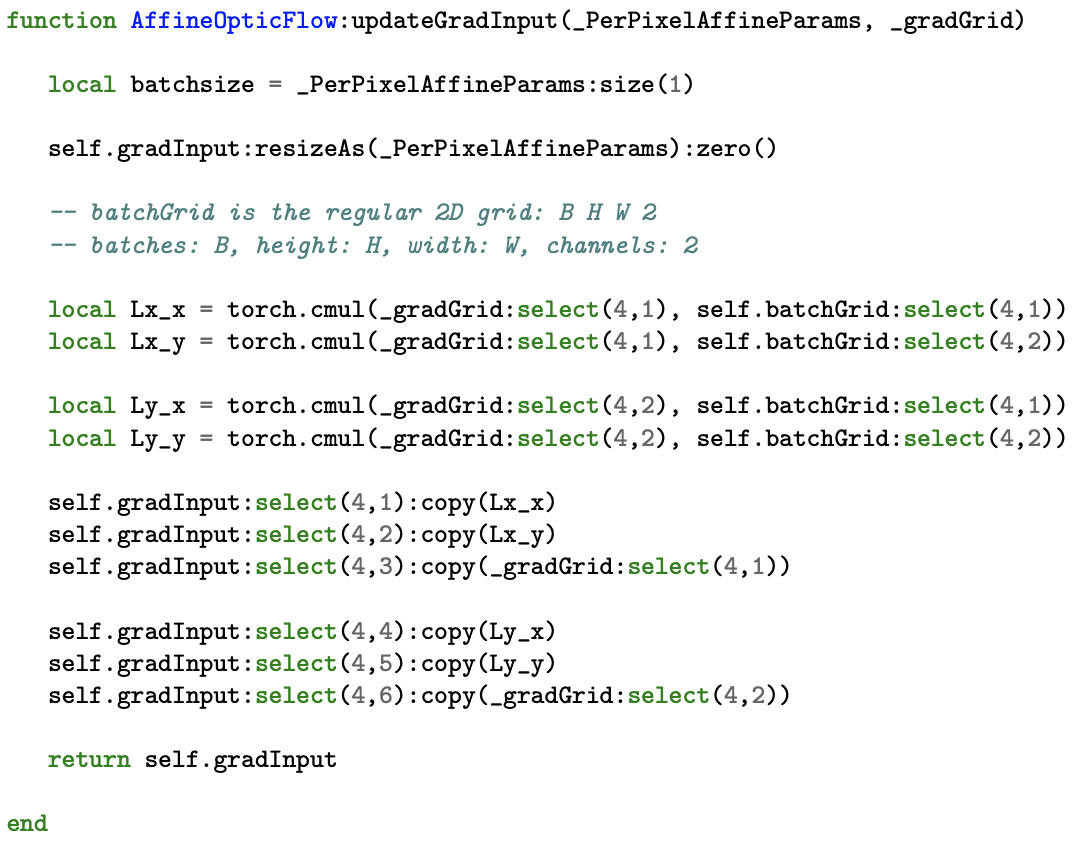

gvnn: Neural Network Library for Geometric Computer Vision

Ankur Handa, Michael Bloesch, Viorica Patraucean, Simon Stent, John McCormac, Andrew Davison

European Conference on Computer Vision (Workshop): Geometry meets Deep Learning, 2016 / arxiv

We introduce gvnn, a neural network library in Torch aimed towards bridging the gap between classic geometric computer vision and deep learning. Inspired by the recent success of Spatial Transformer Networks, we propose several new layers which are often used as parametric transformations on the data in geometric computer vision.

|

Understanding Real World Indoor Scenes With Synthetic Data

Ankur Handa, Viorica Patraucean, Vijay Badrinarayanan, Simon Stent, Roberto Cipolla

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016 / arxiv

In this work, we focus our attention on depth-based semantic per-pixel labelling as a scene understanding problem and show the potential of computer graphics to generate virtually unlimited labelled data from synthetic 3D scenes.

|

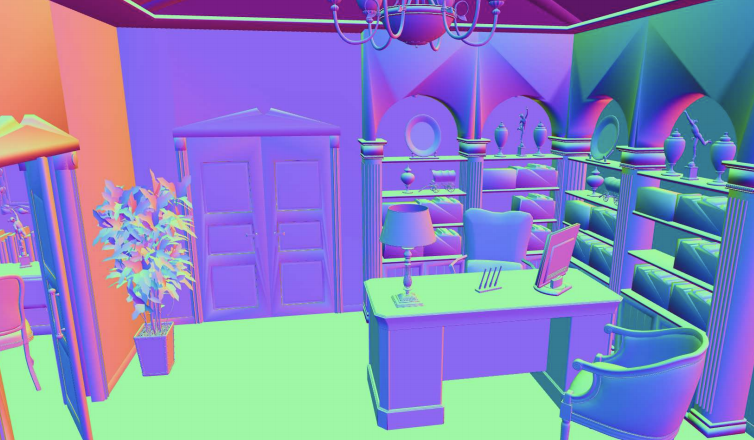

SceneNet: an Annotated Model Generator for Indoor Scene Understanding

Ankur Handa, Viorica Patraucean, Simon Stent, Roberto Cipolla

International Conference on Robotics and Automation (ICRA), 2016 / arxiv

We introduce SceneNet, a framework for generating high-quality annotated 3D scenes to aid indoor scene understanding. SceneNet leverages manually-annotated datasets of real world scenes such as NYUv2 to learn statistics about object co-occurrences and their spatial relationships.

|

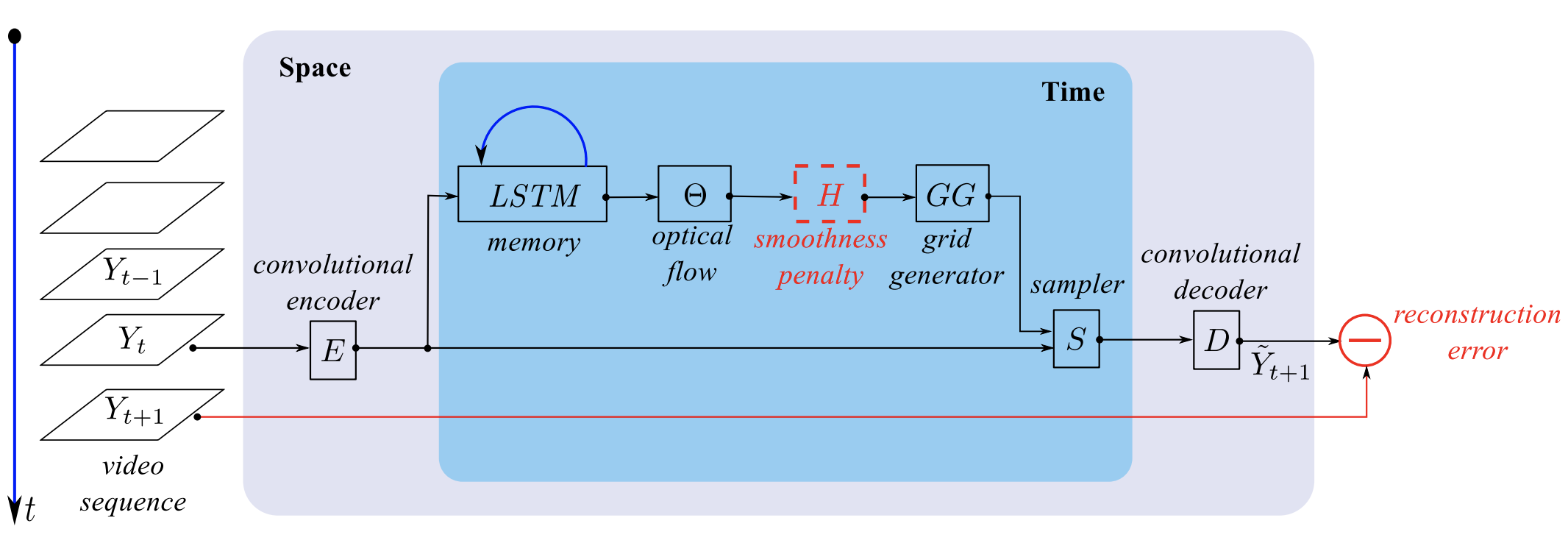

Spatio-Temporal Video Autoencoder With Differentiable Memory

Viorica Patraucean, Ankur Handa, Roberto Cipolla

International Conference on Learning Representations Workshop Track (ICLRW), 2016 / arxiv

We describe a new spatio-temporal video autoencoder, based on a classic spatial image autoencoder and a novel nested temporal autoencoder. The temporal encoder is represented by a differentiable visual memory composed of convolutional long short-term memory (LSTM) cells that integrate changes over time.

|

HDRFusion: HDR SLAM using a low-cost auto-exposure RGB-D sensor

Shuda Li, Ankur Handa, Yang Zhang, Andrew Calway

International Conference on 3D Vision (3DV), 2016 / arxiv

We describe a new method for comparing frame appearance in a frame-to-model 3-D mapping and tracking system using a low dynamic range (LDR) RGB-D camera which is robust to brightness changes caused by auto exposure.

|

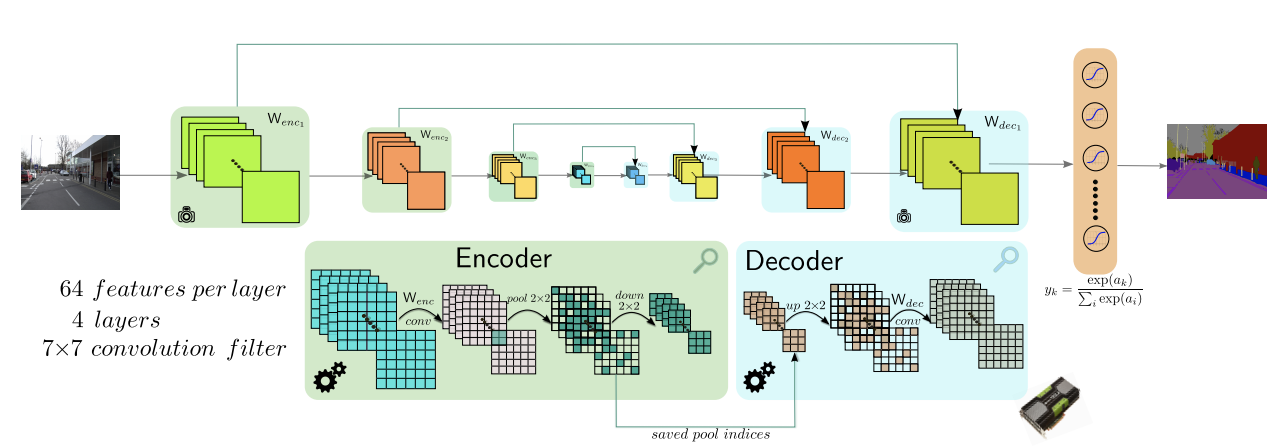

SegNet: A Deep Convolutional Encoder-Decoder Architecture for Robust Semantic Pixel-Wise Labelling

Vijay Badrinarayanan, Ankur Handa, Roberto Cipolla

arXiv, 2015 / arxiv

We propose a novel deep architecture, SegNet, for semantic pixel-wise image labelling. SegNet is composed of a stack of encoders followed by a corresponding decoder stack which feeds into a soft-max classification layer. The decoders help map low resolution feature maps at the output of the encoder stack to full input image size feature maps. This addresses an important drawback of recent deep learning approaches which have adopted networks designed for object categorization for pixel-wise labelling.

|

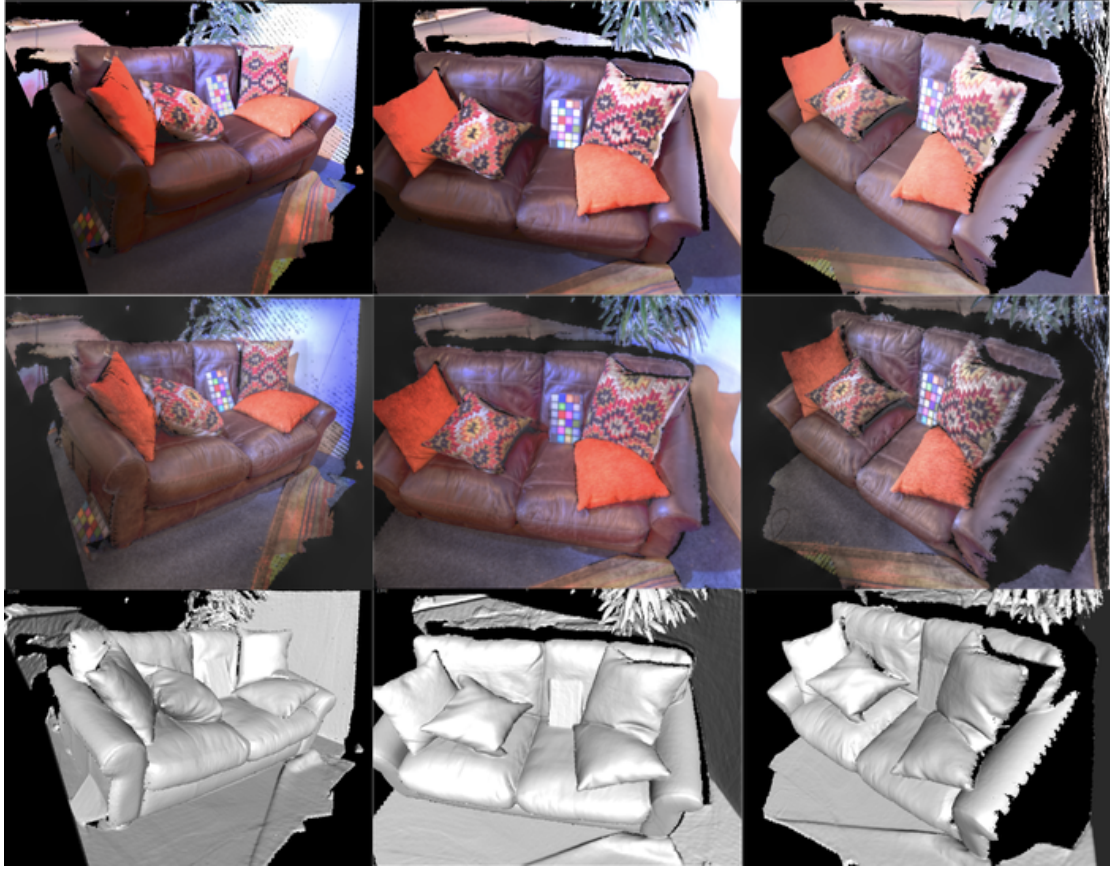

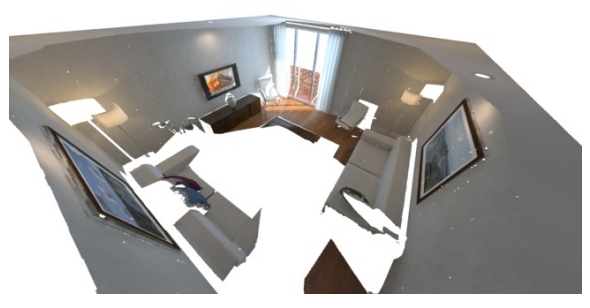

A Benchmark for RGB-D Visual Odometry, 3D Reconstruction and SLAM

Ankur Handa, Thomas Whelan, John McDonald and Andrew J. Davison

International Conference on Robotics and Automation (ICRA), 2014 / arxiv

We introduce the Imperial College London and National University of Ireland Maynooth (ICL-NUIM) dataset for the evaluation of visual odometry, 3D reconstruction and SLAM algorithms that typically use RGB-D data. We present a collection of handheld RGB-D camera sequences within synthetically generated environments. RGB-D sequences with perfect ground truth poses are provided as well as a ground truth surface model that enables a method of quantitatively evaluating the final map or surface reconstruction accuracy.

|

Simultaneous Mosaicing and Tracking with an Event Camera

Hanme Kim, Ankur Handa, Ryad Benosman, Sio-Hoi Ieng, Andrew J. Davison

British Machine Vision Conference (BMVC), 2014 / arxiv

We show for the first time that an event stream, with no additional sensing, can be used to track accurate camera rotation while building a persistent and high quality mosaic of a scene which is super-resolution accurate and has high dynamic range.

Best Industry Paper Award Winner.

|

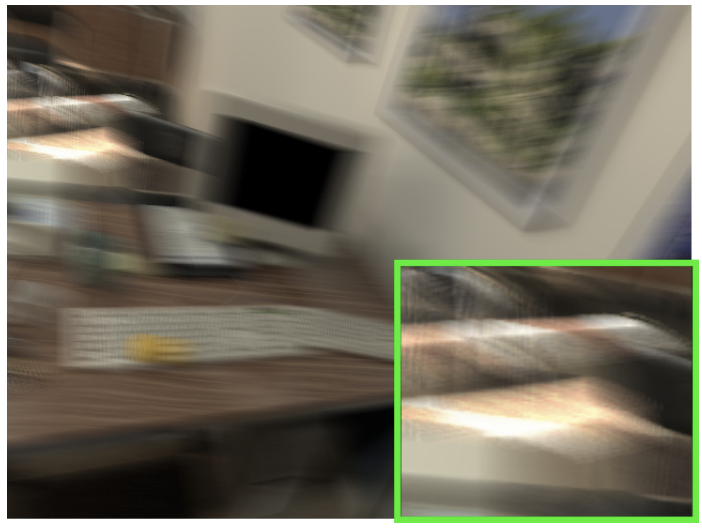

Real-Time Camera Tracking: When is High Frame-Rate Best?

Ankur Handa, Richard A. Newcombe, Adrien Angeli and Andrew J. Davison

European Conference on Computer Vision (ECCV), 2012 / arxiv

Using 3D camera tracking as our test problem, and analysing a fundamental dense whole image alignment approach, we open up a route to a systematic investigation via the careful synthesis of photorealistic video using ray-tracing of a detailed 3D scene, experimentally obtained photometric response and noise models, and rapid camera motions.

|

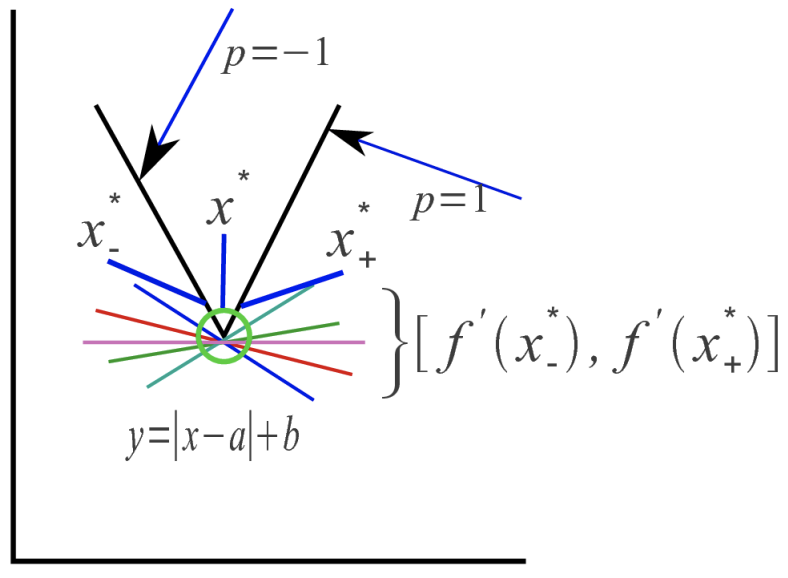

Applications of Legendre-Fenchel transformation to computer vision problems

Ankur Handa, Richard A. Newcombe, Adrien Angeli and Andrew J. Davison

Departmental Technical Report, 2011 / arxiv

This report presents a tutorial-like walkthrough of the famous Pock and Chambolle primal-dual algorithm and provides background on the fundamental Legendre-Fenchel transform that is a building block of the algorithm.

|

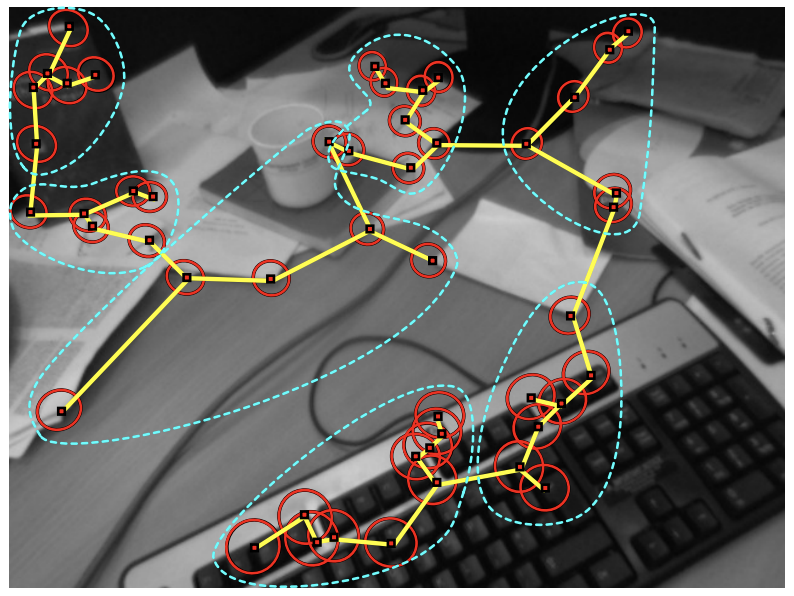

Scalable Active Matching

Ankur Handa, Margarita Chli, Hauke Strasdat and Andrew J. Davison

Computer Vision and Pattern Recognition (CVPR), 2010 / arxiv

We propose two variants called SubAM and CLAM in the context of camera tracking by sequential feature matching with rigorous decisions guided by Information Theory. These variants allow us to match significantly more features than the original AM (Active Matching) algorithm.

|

Design and source code from Jon Barron's website